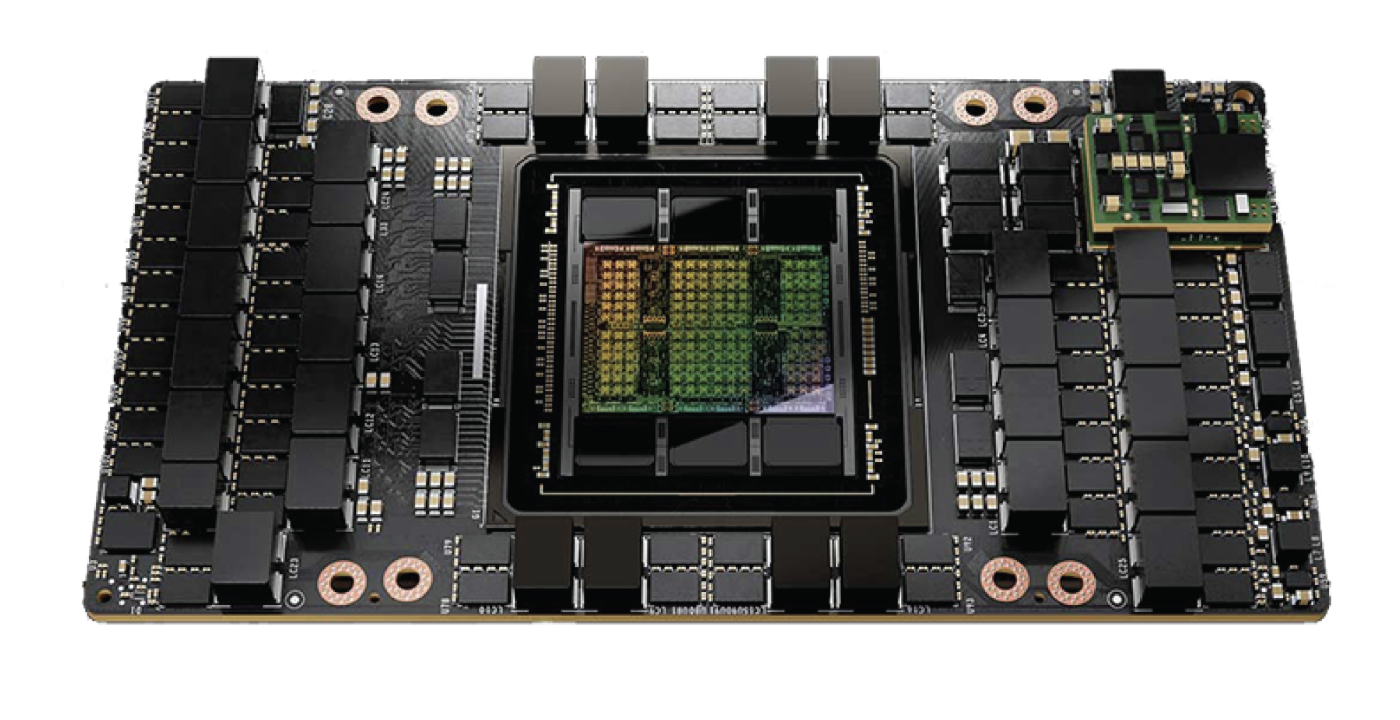

We are proudly introducing the NVIDIA H100 Tensor Core GPU

Unprecedented performance, scalability, and security for every data center.

A Quantum Leap in Accelerated Computing

Access unparalleled performance, scalability, and security for a variety of workloads by leveraging the capabilities of the NVIDIA® H100 Tensor Core GPU. Utilizing the NVIDIA NVLink® Switch System allows seamless connectivity for up to 256 H100 GPUs, enhancing the acceleration of exascale workloads. Additionally, the GPU incorporates a specialized Transformer Engine explicitly designed for handling trillion-parameter language models. Through a culmination of technological advancements, the H100 can achieve an impressive 30X acceleration for large language models (LLMs) compared to its predecessor, establishing itself as a leader in delivering cutting-edge conversational AI.

Safely Boost Workload Performance From Enterprise to Exascale

Transformational AI Training

The H100 boasts fourth-generation Tensor Cores and a Transformer Engine with FP8 precision, resulting in a remarkable 4X faster training speed than the previous generation for GPT-3 (175B) models. The integration of fourth-generation NVLink, providing a GPU-to-GPU interconnect speed of 900 gigabytes per second (GB/s); NDR Quantum-2 InfiniBand networking, enhancing communication between GPUs across nodes; PCIe Gen5; and NVIDIA Magnum IO™ software ensures efficient scalability spanning from compact enterprise systems to extensive, consolidated GPU clusters.

Real-Time Deep Learning Inference

Artificial Intelligence addresses a diverse range of business challenges through various neural networks. An exceptional AI inference accelerator must provide top-notch performance and exhibit versatility in accelerating a broad spectrum of these networks.

Exascale High-Performance Computing

The NVIDIA data center platform consistently achieves performance gains that surpass Moore’s law. The H100 introduces groundbreaking AI capabilities that significantly enhance the synergy of High-Performance Computing (HPC) and AI, expediting the time to discovery for scientists and researchers engaged in addressing the world’s most critical challenges.

Accelerated Data Analytics

The substantial time spent on data analytics frequently hinders AI application development. The dispersion of large datasets across multiple servers poses a challenge for scale-out solutions relying on commodity CPU-only servers, leading to compromised scalability in computing performance.

Enterprise-Ready Utilization

IT managers aim to optimize compute resource utilization in the data center at peak and average levels. Frequently, they implement dynamic reconfiguration of computing resources to appropriately size resources based on the specific workloads in operation.

Built-In Confidential Computing

Conventional confidential computing solutions are typically CPU-based, posing limitations for compute-intensive workloads such as AI and HPC. The NVIDIA Confidential Computing feature, integrated into the NVIDIA Hopper™ architecture, establishes the H100 as the pioneering accelerator with built-in confidential computing capabilities. This empowers users to safeguard the confidentiality and integrity of their data and applications in real time, all while harnessing the unparalleled acceleration capabilities of H100 GPUs.

Unparalleled Performance for Large-Scale AI and HPC

The Hopper Tensor Core GPU is set to drive the NVIDIA Grace Hopper CPU+GPU architecture, meticulously crafted for terabyte-scale accelerated computing and delivering a remarkable 10X increase in performance for large-model AI and HPC applications. The NVIDIA Grace CPU is designed with the adaptability of the Arm® architecture, creating a CPU and server architecture explicitly tailored for accelerated computing needs. The Hopper GPU is seamlessly integrated with the Grace CPU through NVIDIA’s high-speed chip-to-chip interconnect, boasting a bandwidth of 900GB/s, 7X faster than PCIe Gen5. This groundbreaking design promises to provide up to 30X higher aggregate system memory bandwidth to the GPU than current leading servers, delivering up to 10X enhanced performance for applications processing terabytes of data.